HBR published an article directed at people in the business: “Better questions to ask your data scientists” (1). But is this the right approach?

As an analogy, when you go to see a doctor, do you think it makes more sense for the doctor to speak your language or for you to speak doctor-language (medical terms)?

By talking in plain language, the doctor can uncover symptoms that you might not think worth mentioning, or that you might not consider important or related. Or do you know enough medicine to describe your symptoms properly and completely in medical terms?

I know I don’t know enough about medicine, and even less about medical terms. Call me a chicken, but I’d rather not get paracetamol/Panadol for a headache that’s the beginning of a brain tumour. ‘Cluck!’

To me, a doctor consults with you: the diagnosis is a collaborative process between two parties, but who bridges the language gap makes a huge difference to the outcome.

Would you rather be treated for what you really have, or for what you think you have?

Now, how does this relate to “data science”?

I go back to Drew Conway’s definition of data science (2), which looks like this, except that here the “data scientist” is shown as a unicorn:

A “data scientist” is someone who, on top of hacking skills (computer science) and mathematics/statistics skills, has substantive domain knowledge. Basically, the “data scientist” should be able to speak to the business in business language.

One of the things I have learnt while working with clients from various organisations and industries is that clients very often don’t mention issues they don’t think “data science” can help them with. That’s not because these issues are unimportant to them, but simply because they don’t know that “data science” can help solve them; they do not know what they do not know.

Just as an accurate medical diagnosis is what allows the underlying medical issue to be cured, I believe the data science process is in essence consultative. The best outcomes always come from collaboration between the business and the “data scientist”.

It is the role of the “data

scientist” to understand where the client is coming from, dig deeper, ask relevant

questions, know the data that is required to tackle these issues, and maximise

the benefits the client can get. For that, domain knowledge is critical. (And

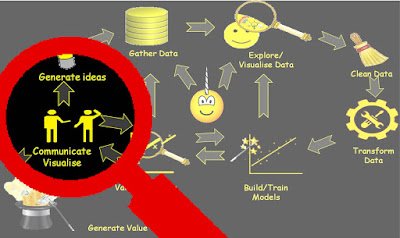

of course that’s just a small part of the “data science” process.(3))

Furthermore, the interactions and collaboration between the business and the “data scientist” are not limited to the “initial diagnostic stage” but continue throughout the whole data science process. A common language is therefore very important to facilitate collaboration, and in my opinion it should be the “data scientist” who speaks business language rather than the business speaking “data science language”.